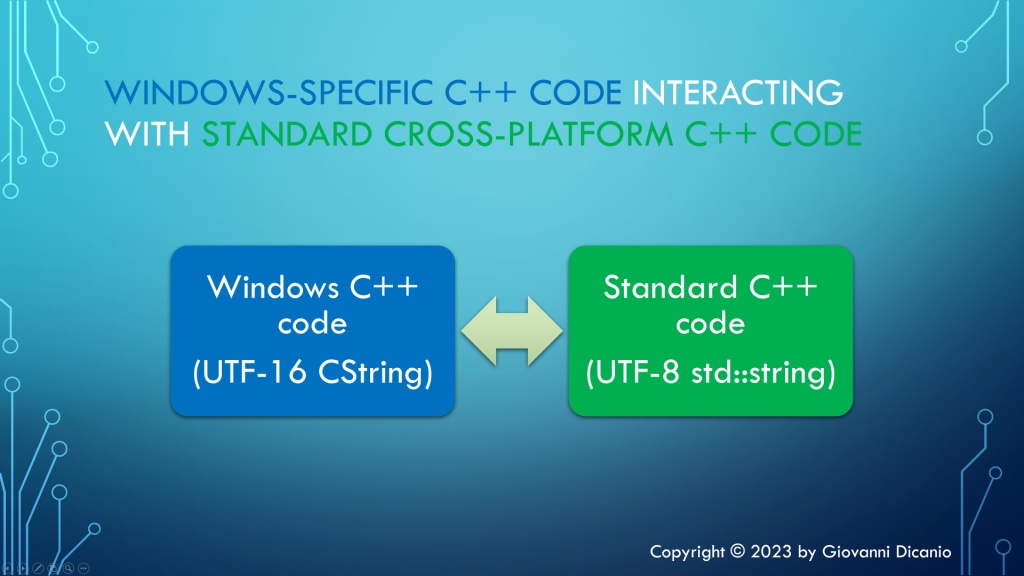

Last time we saw how to convert text between Unicode UTF-16 and UTF-8 using a couple of ATL helper classes (CW2A and CA2W). While this can be a good initial approach to “break the ice” with these Unicode conversions, we can do better.

For example, the aforementioned ATL helper classes create their own temporary memory buffer for the conversion work. The result of the conversion must then be copied from that temporary buffer into the destination CStringA/W’s internal buffer. If we call the Win32 APIs directly instead, we can skip CW2A/CA2W’s intermediate buffer and write the converted bytes straight into the CStringA/W’s internal buffer, which avoids that extra copy.

In addition, directly invoking the Win32 APIs allows us to customize their behavior, for example specifying ad hoc flags that better suit our needs.

Moreover, this way we have more freedom in how to signal error conditions: Throw exceptions? If so, of what kind: a custom-defined exception class? Use return codes? Use something like std::optional? You can pick whichever error-handling method best fits the particular problem at hand.

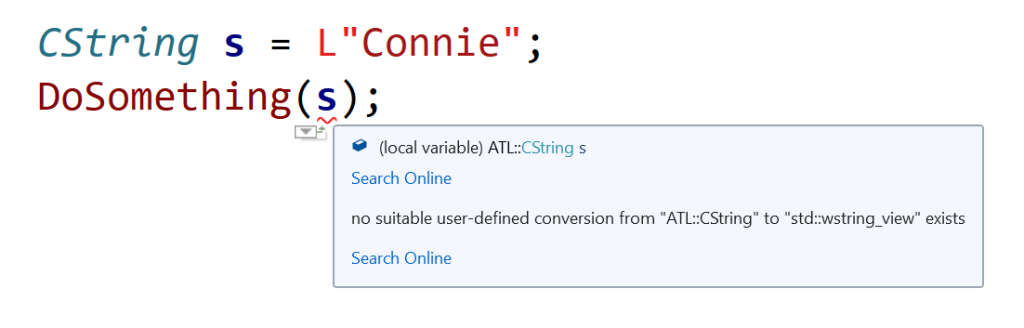

So, let’s start designing our custom Unicode UTF-16/UTF-8 conversion functions. First, we have to pick a couple of classes to store UTF-16-encoded text and UTF-8-encoded text. That’s easy: In the context of ATL (and MFC), we can pick CStringW for UTF-16, and CStringA for UTF-8.

Now, let’s focus on the prototype of the conversion functions. We could pick something like this:

```cpp
// Convert from UTF-16 to UTF-8
CStringA Utf8FromUtf16(CStringW const& utf16);

// Convert from UTF-8 to UTF-16
CStringW Utf16FromUtf8(CStringA const& utf8);
```

With this coding style, considering the first function, the “Utf16” part of the function name is located near the corresponding “utf16” parameter, and the “Utf8” part is near the returned UTF-8 string. In other words, in this way we put the return on the left, and the argument on the right:

```cpp
CStringA resultUtf8 = Utf8FromUtf16(utf16Text);
//                            ^^^^^^^^^^^^^^^ <--- Argument: UTF-16

CStringA resultUtf8 = Utf8FromUtf16(utf16Text);
//       ^^^^^^^^^^^^^^^^^ <--- Return: UTF-8
```

Another approach is something more similar to the various std::to_string overloads implemented by the C++ Standard Library:

```cpp
// Convert from UTF-16 to UTF-8
CStringA ToUtf8(CStringW const& utf16);

// Convert from UTF-8 to UTF-16
CStringW ToUtf16(CStringA const& utf8);
```

Let’s go with this second style.

Now, let’s focus on the UTF-16-to-UTF-8 conversion, as the inverse conversion is pretty similar.

```cpp
// Convert from UTF-16 to UTF-8
CStringA ToUtf8(CStringW const& utf16)
{
    // TODO ...
}
```

The first thing we can do inside the conversion function is to check the special case of an empty input string. In this case, we’ll just return an empty output string:

```cpp
// Convert from UTF-16 to UTF-8
CStringA ToUtf8(CStringW const& utf16)
{
    // Special case of empty input string
    if (utf16.IsEmpty())
    {
        // Empty input --> return empty output string
        return CStringA();
    }
```

Now let’s focus on the general case of non-empty input string. First, we need to figure out the size of the result UTF-8 string. Then, we can allocate a buffer of proper size for the result CStringA object. And finally we can invoke a proper Win32 API for doing the conversion.
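To see why the size has to be computed rather than guessed from the UTF-16 length, here is a minimal, portable sketch of the kind of counting involved. `Utf8LengthOf` is a hypothetical helper written only for illustration: it uses `std::u16string` instead of CStringW so that it compiles anywhere, and it is not how the Win32 API is implemented. It returns 0 for an unpaired surrogate, loosely mirroring the "fail on invalid input" behavior discussed in this post.

```cpp
#include <cstddef>
#include <string>

// Hypothetical helper, for illustration only.
// Returns the number of UTF-8 bytes needed to encode the given UTF-16 text,
// or 0 if the input is empty or contains an unpaired surrogate.
std::size_t Utf8LengthOf(const std::u16string& utf16)
{
    std::size_t bytes = 0;

    for (std::size_t i = 0; i < utf16.size(); ++i)
    {
        const char16_t unit = utf16[i];

        if (unit < 0x80)
        {
            bytes += 1; // ASCII range: one byte
        }
        else if (unit < 0x800)
        {
            bytes += 2; // e.g. accented Latin letters: two bytes
        }
        else if (unit >= 0xD800 && unit <= 0xDBFF)
        {
            // High surrogate: must be followed by a low surrogate
            if (i + 1 >= utf16.size() ||
                utf16[i + 1] < 0xDC00 || utf16[i + 1] > 0xDFFF)
            {
                return 0; // invalid UTF-16 sequence
            }
            bytes += 4; // surrogate pair: four bytes
            ++i;        // skip the low surrogate just consumed
        }
        else if (unit >= 0xDC00 && unit <= 0xDFFF)
        {
            return 0; // lone low surrogate: invalid UTF-16 sequence
        }
        else
        {
            bytes += 3; // rest of the Basic Multilingual Plane: three bytes
        }
    }

    return bytes;
}
```

One UTF-16 code unit can therefore become one, two, or three UTF-8 bytes, and a surrogate pair becomes four, so the destination size depends on the actual content of the string.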

So, how can you get the size of the destination UTF-8 string? You can invoke the WideCharToMultiByte Win32 API, like this:

```cpp
    // Safely fail if an invalid UTF-16 character sequence is encountered
    constexpr DWORD kFlags = WC_ERR_INVALID_CHARS;
```

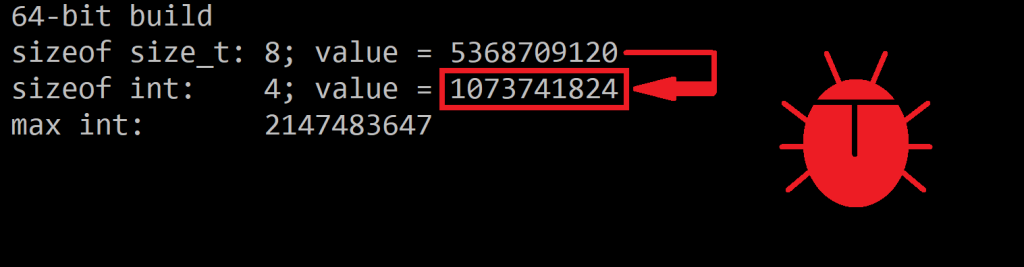

```cpp
    const int utf16Length = utf16.GetLength();

    // Get the length, in chars, of the resulting UTF-8 string
    const int utf8Length = ::WideCharToMultiByte(
        CP_UTF8,          // convert to UTF-8
        kFlags,           // conversion flags
        utf16,            // source UTF-16 string
        utf16Length,      // length of source UTF-16 string, in wchar_ts
        nullptr,          // unused - no conversion required in this step
        0,                // request size of destination buffer, in chars
        nullptr, nullptr  // unused
    );
```

Note that the interface of that C Win32 API is non-trivial and error-prone. Still, after reading its documentation and doing some tests, you can figure out the parameters.

If this API fails, it will return 0. So, here you can write some error handling code:

```cpp
    if (utf8Length == 0)
    {
        // Conversion error: capture error code and throw
        AtlThrowLastWin32();
    }
```

Here I used the AtlThrowLastWin32 function, which basically invokes the GetLastError Win32 API, converts the returned DWORD error code to HRESULT, and invokes AtlThrow with that HRESULT value. Of course, you are free to define your custom C++ exception class and throw it in case of errors, or use whatever error-reporting method you like.
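As an illustration of the custom-exception option, here is a minimal sketch of what such a class might look like. The class name, its members, and the use of `std::uint32_t` in place of the Win32 DWORD type (so that the snippet compiles without Windows headers) are all assumptions made for this example; nothing here comes from ATL or the Win32 API.

```cpp
#include <cstdint>
#include <stdexcept>

// Hypothetical exception class, for illustration only
// (it is not part of ATL or of the Win32 API).
class UnicodeConversionException : public std::runtime_error
{
public:
    // Which conversion was in progress when the failure happened
    enum class ConversionType { FromUtf16ToUtf8, FromUtf8ToUtf16 };

    UnicodeConversionException(const char* message,
                               std::uint32_t errorCode,
                               ConversionType type)
        : std::runtime_error(message),
          m_errorCode(errorCode),
          m_type(type)
    {
    }

    // Error code captured at the failure site
    // (std::uint32_t stands in for the Win32 DWORD type)
    std::uint32_t ErrorCode() const noexcept { return m_errorCode; }

    // Direction of the conversion that failed
    ConversionType Direction() const noexcept { return m_type; }

private:
    std::uint32_t m_errorCode;
    ConversionType m_type;
};
```

With a class like this, the `AtlThrowLastWin32()` call could be replaced by something along the lines of `throw UnicodeConversionException("Cannot convert from UTF-16 to UTF-8.", ::GetLastError(), UnicodeConversionException::ConversionType::FromUtf16ToUtf8);`, and callers could catch it as a `std::runtime_error`.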

Now that we know how many chars (i.e. bytes) are required to represent the result UTF-8-encoded string, we can create a CStringA object, and invoke its GetBuffer method to allocate an internal CString buffer of proper size:

```cpp
    // Make room in the destination string for the converted bits
    CStringA utf8;
    char* utf8Buffer = utf8.GetBuffer(utf8Length);
    ATLASSERT(utf8Buffer != nullptr);
```

Now we can invoke the aforementioned WideCharToMultiByte API again, this time passing the address of the allocated destination buffer and its size. In this way, the API will do the conversion work, and will write the UTF-8-encoded string in the provided destination buffer:

```cpp
    // Do the actual conversion from UTF-16 to UTF-8
    int result = ::WideCharToMultiByte(
        CP_UTF8,          // convert to UTF-8
        kFlags,           // conversion flags
        utf16,            // source UTF-16 string
        utf16Length,      // length of source UTF-16 string, in wchar_ts
        utf8Buffer,       // pointer to destination buffer
        utf8Length,       // size of destination buffer, in chars
        nullptr, nullptr  // unused
    );
    if (result == 0)
    {
        // Conversion error
        // ...
    }
```

Before returning the result CStringA object, we need to release the buffer allocated with CString::GetBuffer, invoking the matching ReleaseBuffer method:

```cpp
    // Don't forget to call ReleaseBuffer on the CString object!
    utf8.ReleaseBuffer(utf8Length);
```

Now we can happily return the utf8 CStringA object, containing the converted UTF-8-encoded string:

```cpp
    return utf8;
} // End of function ToUtf8
```

A similar approach can be followed for the inverse conversion from UTF-8 to UTF-16. This time, the Win32 API to invoke is MultiByteToWideChar.

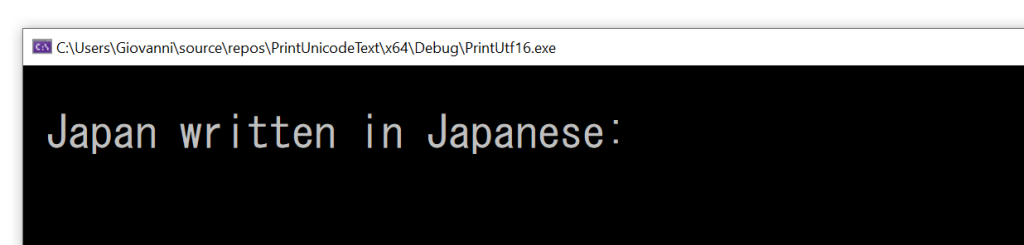

Fortunately, you don’t have to write this kind of code from scratch. On my GitHub page, I have uploaded some easy-to-use C++ code that I wrote that implements these two Unicode UTF-16/UTF-8 conversion functions, using ATL CStringW/A and direct Win32 API calls. Enjoy!